The Brain Software Review

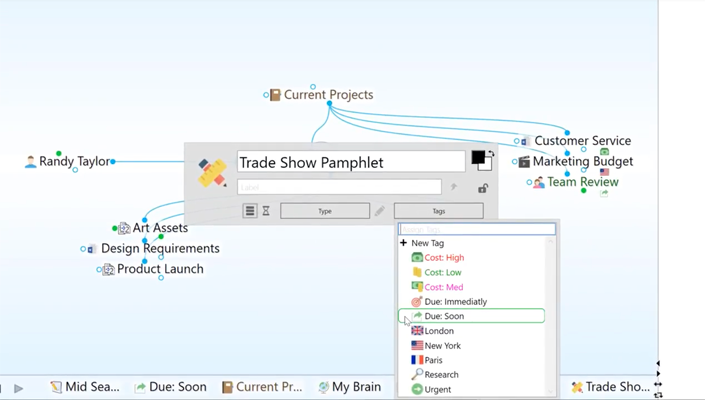

Last month I mentioned that among my perennial favorites in the 'interesting software' category is The Brain, known in a previous incarnation as Personal Brain and out now with a substantially revised The Brain 7. Here's how it looks:

Steve Zeoli, of Vermont, has over the years proudly identified himself as a sufferer of CRIMP: Compulsive Reactive Information Manager Purchasing. This is of course the same syndrome I euphemistically describe as 'a taste for "interesting" software,' and it has led Zeoli, like me, to keep trying every new info-handling system that appears. It has also led him to write a series of illuminating chronicles about these programs. My purpose today is to point toward some of his recent entries on the newest edition of The Brain (he is also the source of the screenshot above). They are:

- an overview of The Brain's improvements, strengths, and limits;

- a description of how he uses The Brain as a 'commonplace book';

- how he uses Tinderbox for the same purpose (hey, endlessly trying out new tools for the same job is an 'opportunity,' not a waste or distraction, in CRIMP-land);

- an early Tinderbox-v-Brain smackdown highlighting their respective traits;

- and others you'll see on his site.

If you are interested in software, you will be interested in this.

Mind-reading software developed in the Netherlands can decipher the sounds being spoken to a person, and even who is saying them, from scans of the listener’s brain. The remarkable feat has given researchers fresh insight into how the brain processes language, and raises the tantalising prospect of devices that can return speech to the speechless.

To train the software, neuroscientists used functional magnetic resonance imaging (fMRI) to track the brain activity of seven people while they listened to three different speakers saying simple vowel sounds.

The team found that each speaker and each sound created a distinctive “neural fingerprint” in a listener’s auditory cortex, the brain region that deals with hearing.

These fingerprints were used to create rules that could decode later activity and determine both who was being listened to and what they were saying.
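The article does not say which decoding algorithm the team used. As a minimal sketch of the general "fingerprint" idea, assuming each scan can be treated as a plain vector of voxel activations, the code below uses a nearest-centroid classifier: it averages the training scans for each speaker into one fingerprint, then assigns a new scan to whichever fingerprint it correlates with best. This is a swapped-in illustrative technique, not the Maastricht group's method; the speakers "A", "B", "C", the voxel count, the noise level and all data are hypothetical, simulated random numbers rather than fMRI measurements.

```python
import math
import random
from statistics import mean


def pearson(a, b):
    """Pearson correlation between two equal-length vectors."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    norm = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return cov / norm


def train_fingerprints(patterns, labels):
    """Average all training patterns that share a label into one 'fingerprint'."""
    groups = {}
    for pattern, label in zip(patterns, labels):
        groups.setdefault(label, []).append(pattern)
    return {label: [mean(col) for col in zip(*ps)] for label, ps in groups.items()}


def decode(fingerprints, pattern):
    """Return the label whose fingerprint correlates best with a new pattern."""
    return max(fingerprints, key=lambda label: pearson(fingerprints[label], pattern))


# Hypothetical simulated data: 3 speakers, 50 'voxels', noisy repeated scans.
rng = random.Random(0)
N_VOXELS = 50
true_prints = {s: [rng.gauss(0, 1) for _ in range(N_VOXELS)] for s in ("A", "B", "C")}


def simulate_scan(speaker, noise=0.8):
    """One noisy simulated 'scan' of a listener hearing the given speaker."""
    return [v + rng.gauss(0, noise) for v in true_prints[speaker]]


train_x, train_y = [], []
for speaker in true_prints:
    for _ in range(10):
        train_x.append(simulate_scan(speaker))
        train_y.append(speaker)

fingerprints = train_fingerprints(train_x, train_y)

# Decode fresh scans that were not part of training.
test = [(s, simulate_scan(s)) for s in true_prints for _ in range(5)]
accuracy = mean([1.0 if decode(fingerprints, x) == s else 0.0 for s, x in test])
print(f"decoding accuracy on new scans: {accuracy:.2f}")
```

Correlation rather than raw distance is used for matching so that an overall rise or fall in signal across a scan does not by itself change which fingerprint wins.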

Tell-all brain

“We have [created] a sort of speech-recognition device which is completely based on the brain activity of the listener,” explained Elia Formisano of Maastricht University, who led the group.

The team hopes to match recent advances in using fMRI to identify what a person is looking at from their brain activity. Until now, the best mind-reading feats achieved for auditory brain activity extended only to distinguishing between broad categories of sound, such as human voices versus animal cries.


“This is the first study in which we can really distinguish two human voices, or two specific sounds,” Formisano told New Scientist.

Hello, I’m listening

In the course of making that possible, the team made new discoveries about how the brain processes speech.

They found that whatever sound a given speaker makes, their voice triggers the same fingerprint in the listener's brain. Likewise, a given vowel sound produces the same fingerprint, independent of the speaker.

This makes it possible to have software recognise combinations of speakers and sounds that it has not encountered before. It should be possible to teach the system how to recognise all the component sounds of speech, and then recognise full words, Formisano says.
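Why separate speaker and vowel fingerprints would let a decoder handle combinations it has never seen can be illustrated with a second minimal sketch. It assumes, purely for illustration, that a scan is the sum of a speaker pattern, a vowel pattern and noise; the speakers, vowels, voxel count and noise level are hypothetical, and nearest-centroid decoding again stands in for whatever classifier the study actually used. One speaker/vowel pairing is withheld from training entirely, and two independent decoders, one for speakers and one for vowels, are then asked to name both parts of scans of that unseen pairing.

```python
import math
import random
from statistics import mean


def pearson(a, b):
    """Pearson correlation between two equal-length vectors."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def centroids(patterns, labels):
    """Average the patterns sharing each label into one fingerprint per label."""
    groups = {}
    for pattern, label in zip(patterns, labels):
        groups.setdefault(label, []).append(pattern)
    return {label: [mean(col) for col in zip(*ps)] for label, ps in groups.items()}


def decode(cents, pattern):
    """Pick the label whose fingerprint correlates best with the pattern."""
    return max(cents, key=lambda label: pearson(cents[label], pattern))


rng = random.Random(1)
N_VOXELS = 200
SPEAKERS, VOWELS = ("A", "B", "C"), ("a", "i", "u")
speaker_print = {s: [rng.gauss(0, 1) for _ in range(N_VOXELS)] for s in SPEAKERS}
vowel_print = {v: [rng.gauss(0, 1) for _ in range(N_VOXELS)] for v in VOWELS}


def scan(speaker, vowel, noise=0.5):
    """Hypothetical additive model: speaker part + vowel part + measurement noise."""
    return [s + v + rng.gauss(0, noise)
            for s, v in zip(speaker_print[speaker], vowel_print[vowel])]


HELD_OUT = ("C", "u")  # this speaker/vowel pairing never appears in training
X, spk, vow = [], [], []
for s in SPEAKERS:
    for v in VOWELS:
        if (s, v) == HELD_OUT:
            continue
        for _ in range(8):
            X.append(scan(s, v))
            spk.append(s)
            vow.append(v)

speaker_dec = centroids(X, spk)  # one fingerprint per speaker, pooled over vowels
vowel_dec = centroids(X, vow)    # one fingerprint per vowel, pooled over speakers

tests = [scan(*HELD_OUT) for _ in range(10)]
results = [(decode(speaker_dec, x), decode(vowel_dec, x)) for x in tests]
print(results.count(HELD_OUT), "of", len(tests), "unseen scans decoded as", HELD_OUT)
```

Because each decoder pools its training scans over the other factor, the vowel component of a scan behaves like background for the speaker decoder and vice versa; under the additive assumption, that is what lets the withheld pairing be decoded without any training examples of it.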


“Vowel sounds are not meaningful, but they are language,” he says. “These are the building blocks of language.”

Noisy world

The group are now working to make the system robust enough to recognise more complex sounds without training, and in a noisy environment.

“It’s interesting to see fMRI techniques extended to the auditory cortex,” said Kendrick Kay of the University of California, Berkeley, who studies the brain’s visual systems using similar methods and earlier this year created a system that decodes brain activity to reveal what a person is looking at.

The fact that the Netherlands team has shown that the brain deals with the categorisation of speakers and sounds differently is particularly significant, he says.

Office helper

The new approach could help improve voice recognition software, Formisano says, although probably not by leading to a commercially available system to transcribe conversations from people’s brains.

Instead, the existing mind-reading software will make it possible to break down the human brain’s impressive ability to process sound and voices, even in the face of distracting background noise. Copying those tricks would provide a new way to make voice recognition software much more effective.

Journal reference: Science (DOI: 10.1126/science.1164318)
